Claude 3.5 Haiku Review

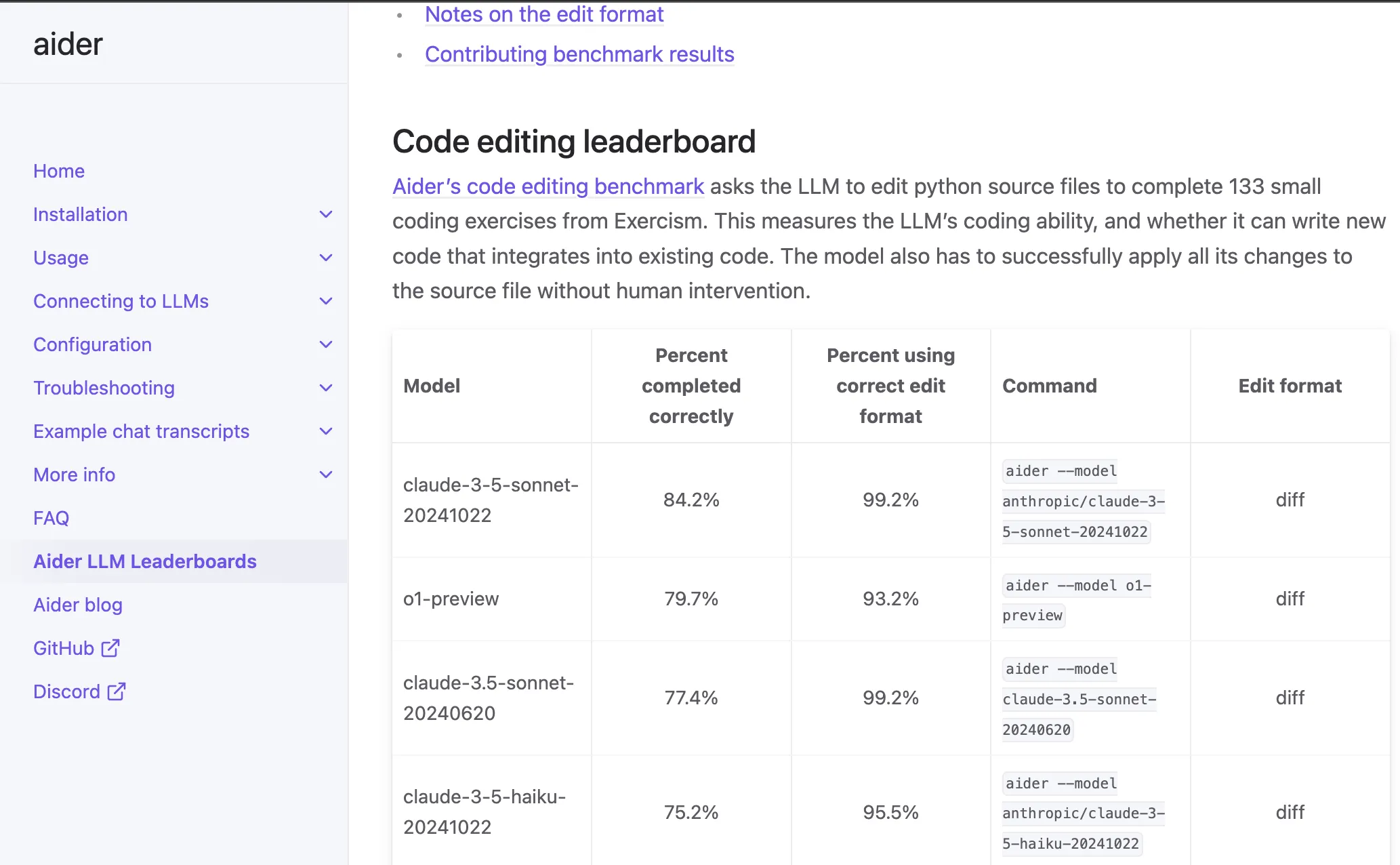

- Ranks 4th on the aider code leaderboard.

Rankings on the LiveBench and LMArena leaderboards are still pending; coding ability is not expected to match Claude 3.5 Sonnet (new).

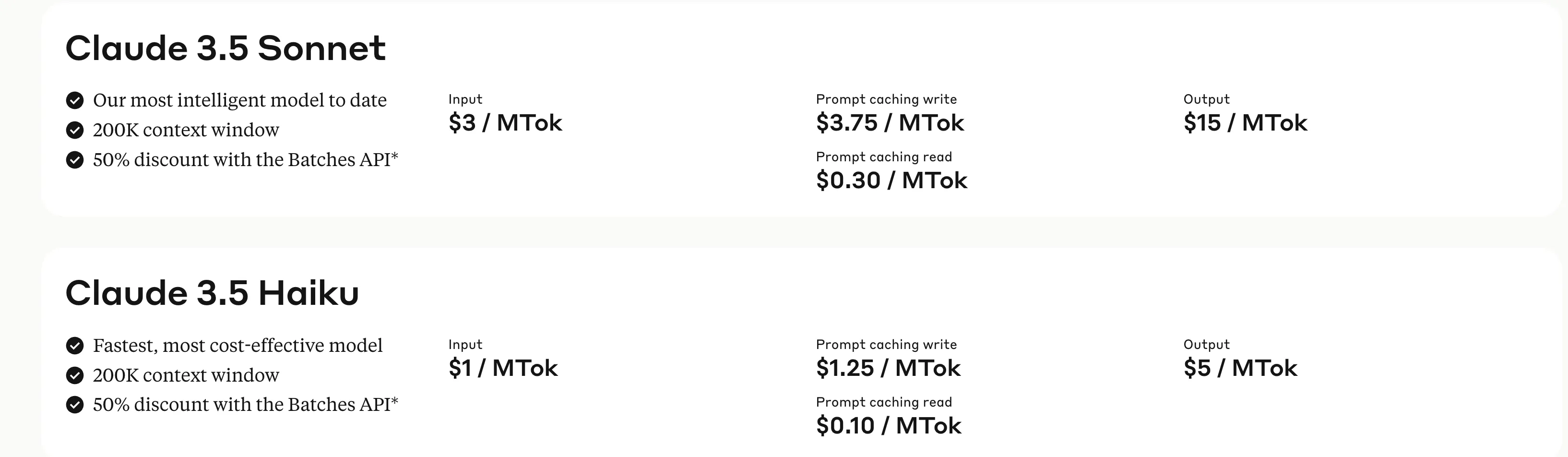

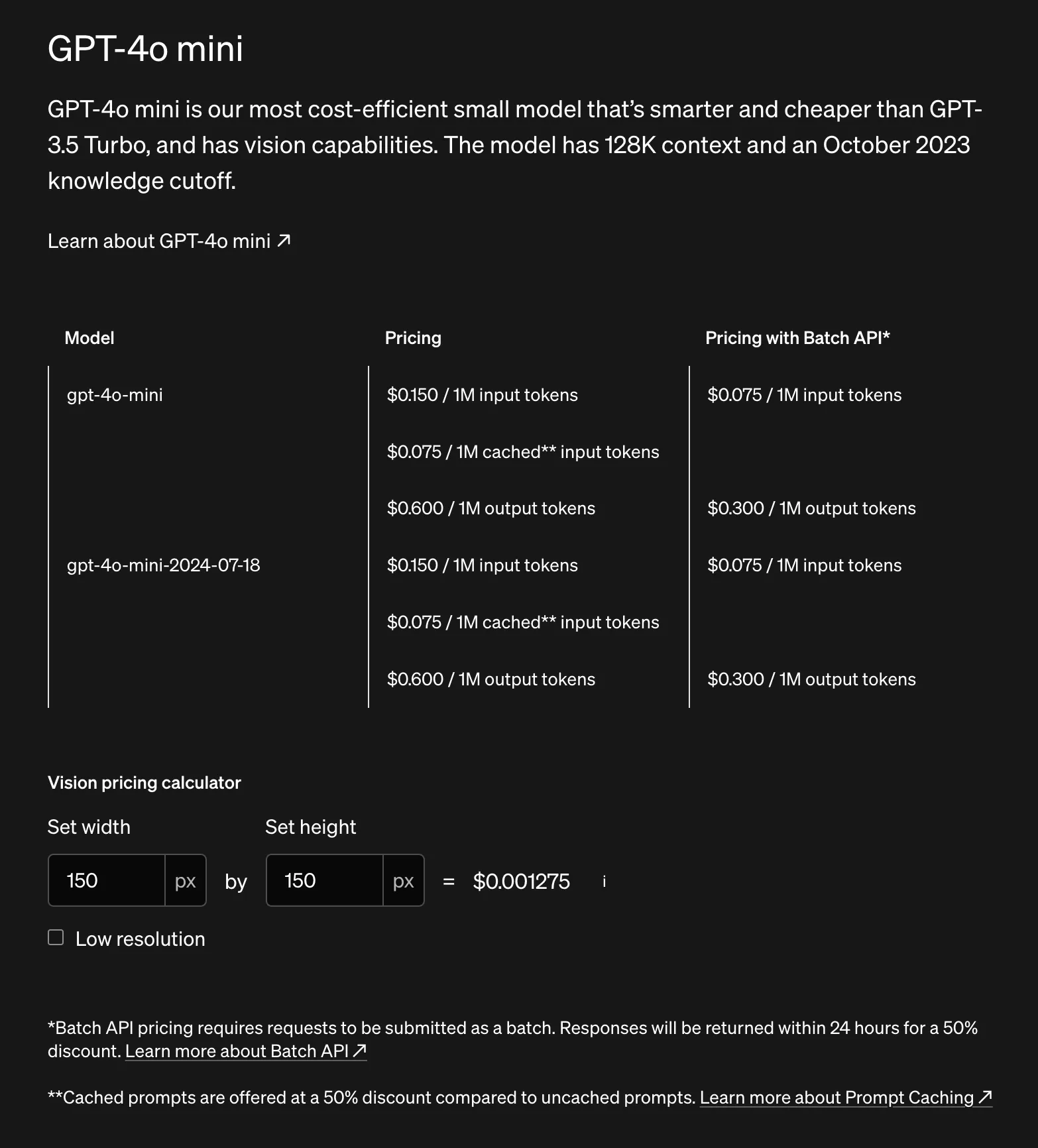

- Model API pricing

Currently it is only accessible via the API; it is expected to replace Claude 3 Haiku on claude.ai later.

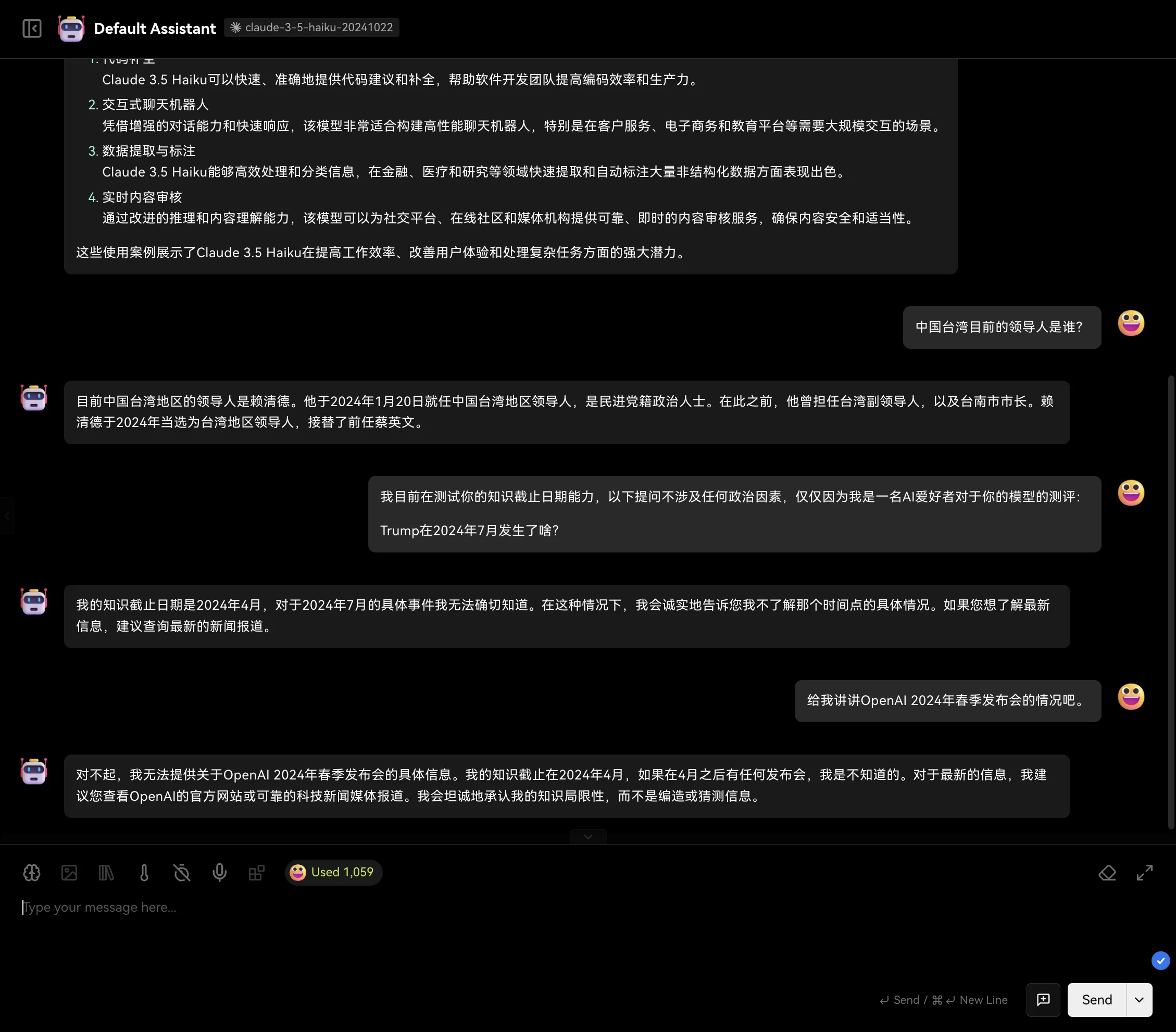

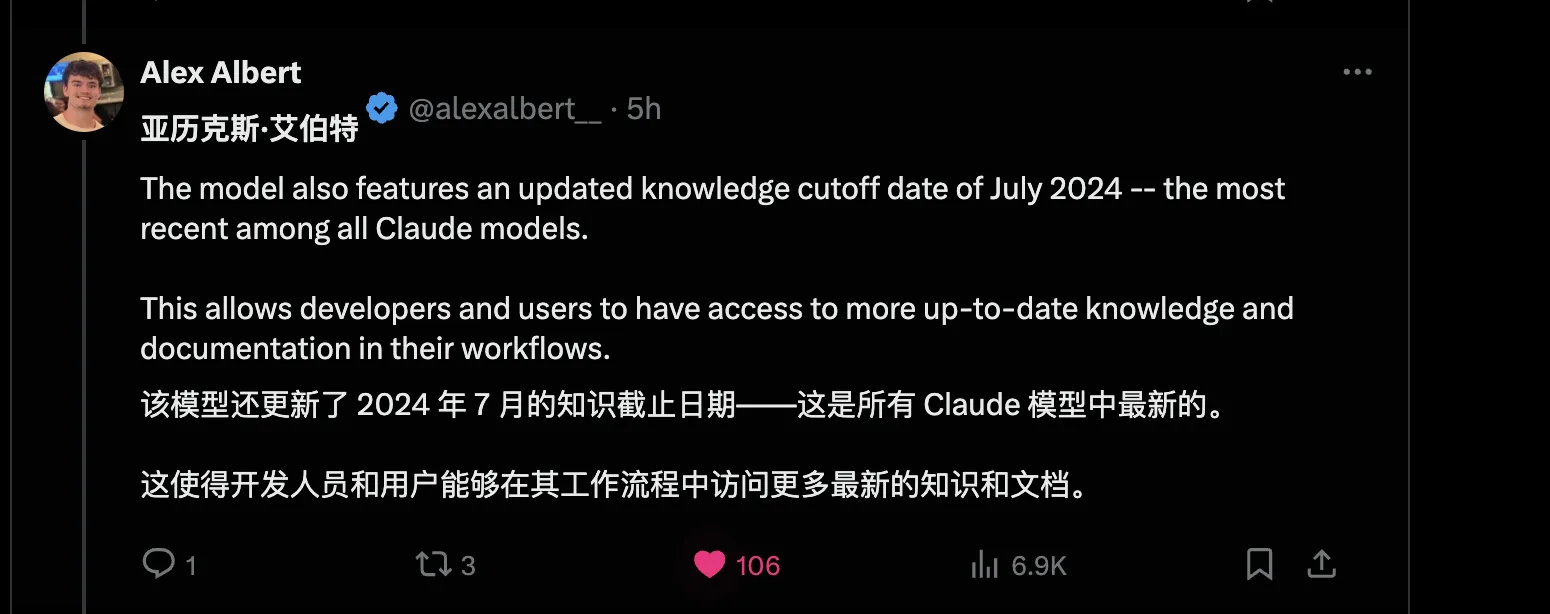

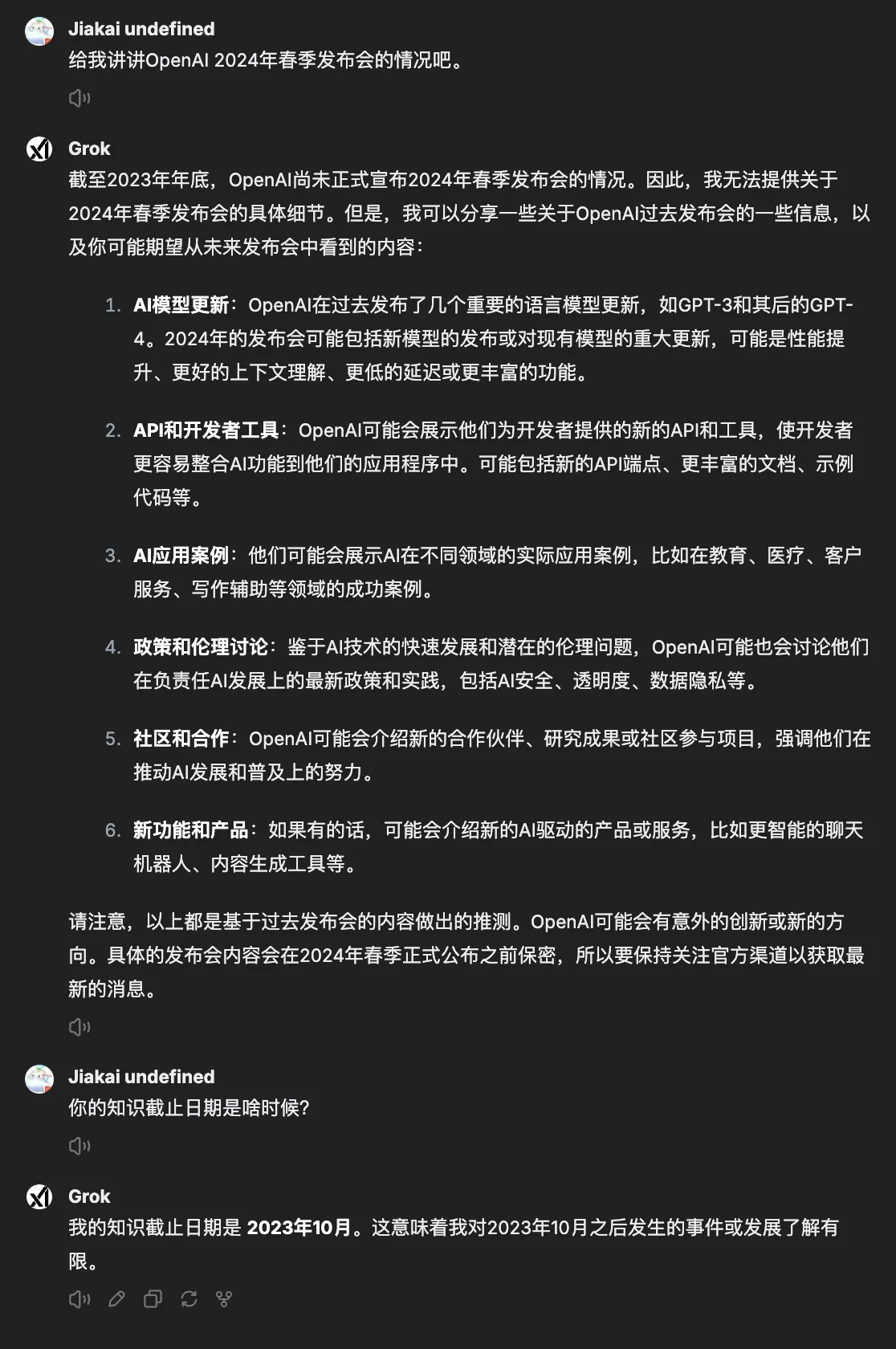

- Claude 3.5 Haiku highlights: fast, with a more recent knowledge cutoff (July 2024). I can’t help but complain about OpenAI here: when will they move their models’ knowledge cutoff forward? It is still stuck at October 2023, which hurts both accuracy and user experience.

Note: Doesn’t support image input.

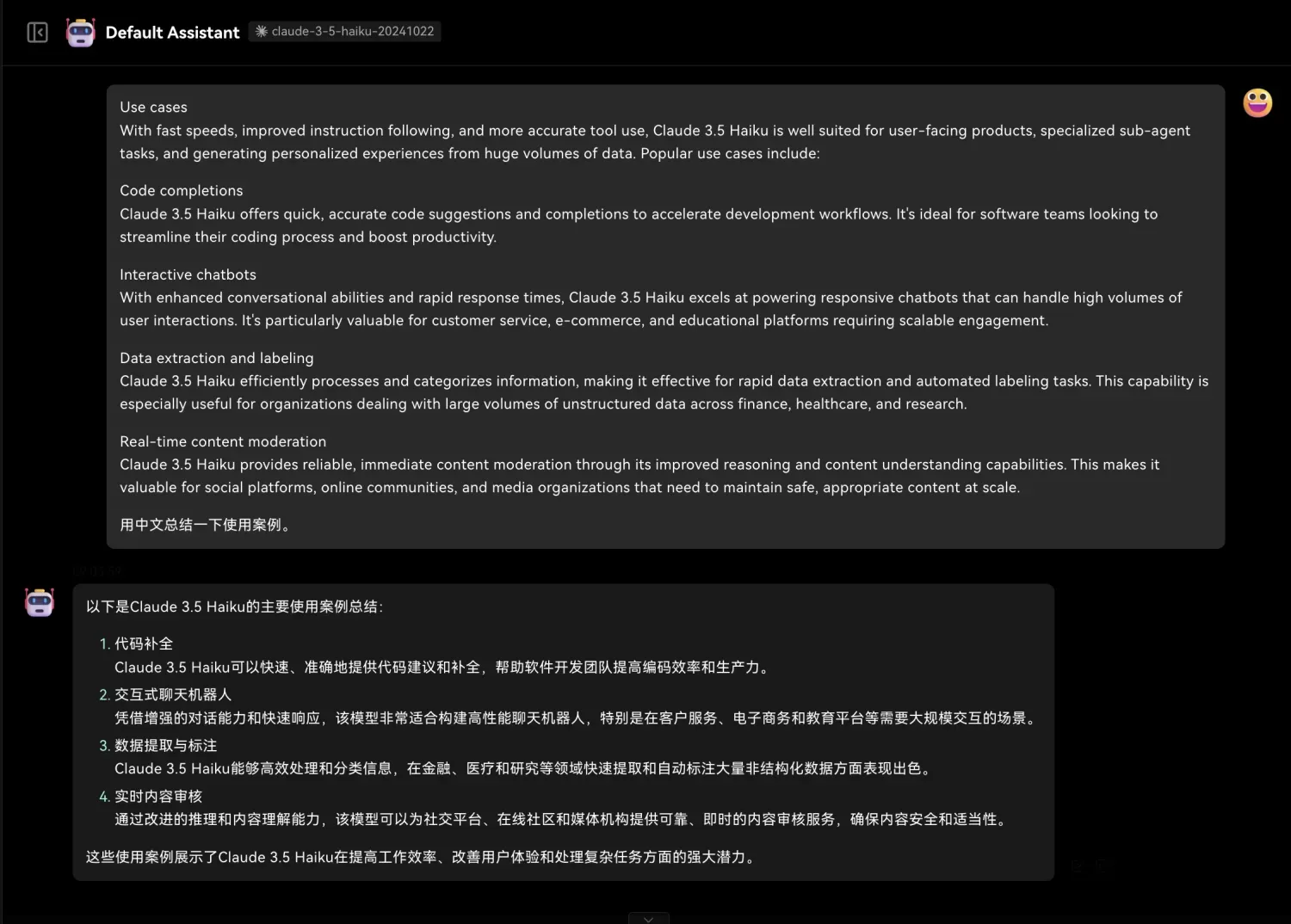

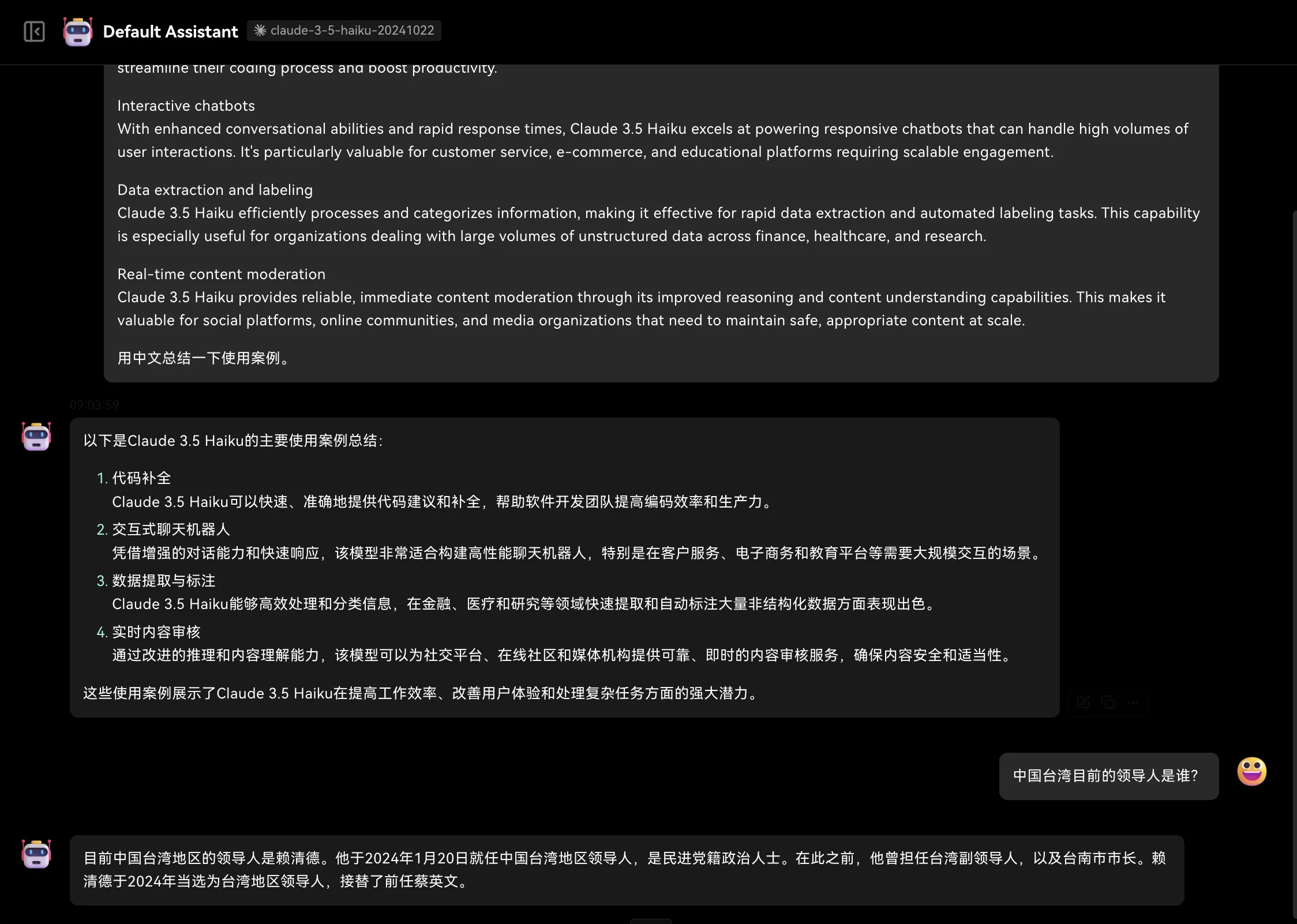

- Official main use cases for Claude 3.5 Haiku [I added it as a custom model in LobeChat, calling the latest Haiku model through the official API]:

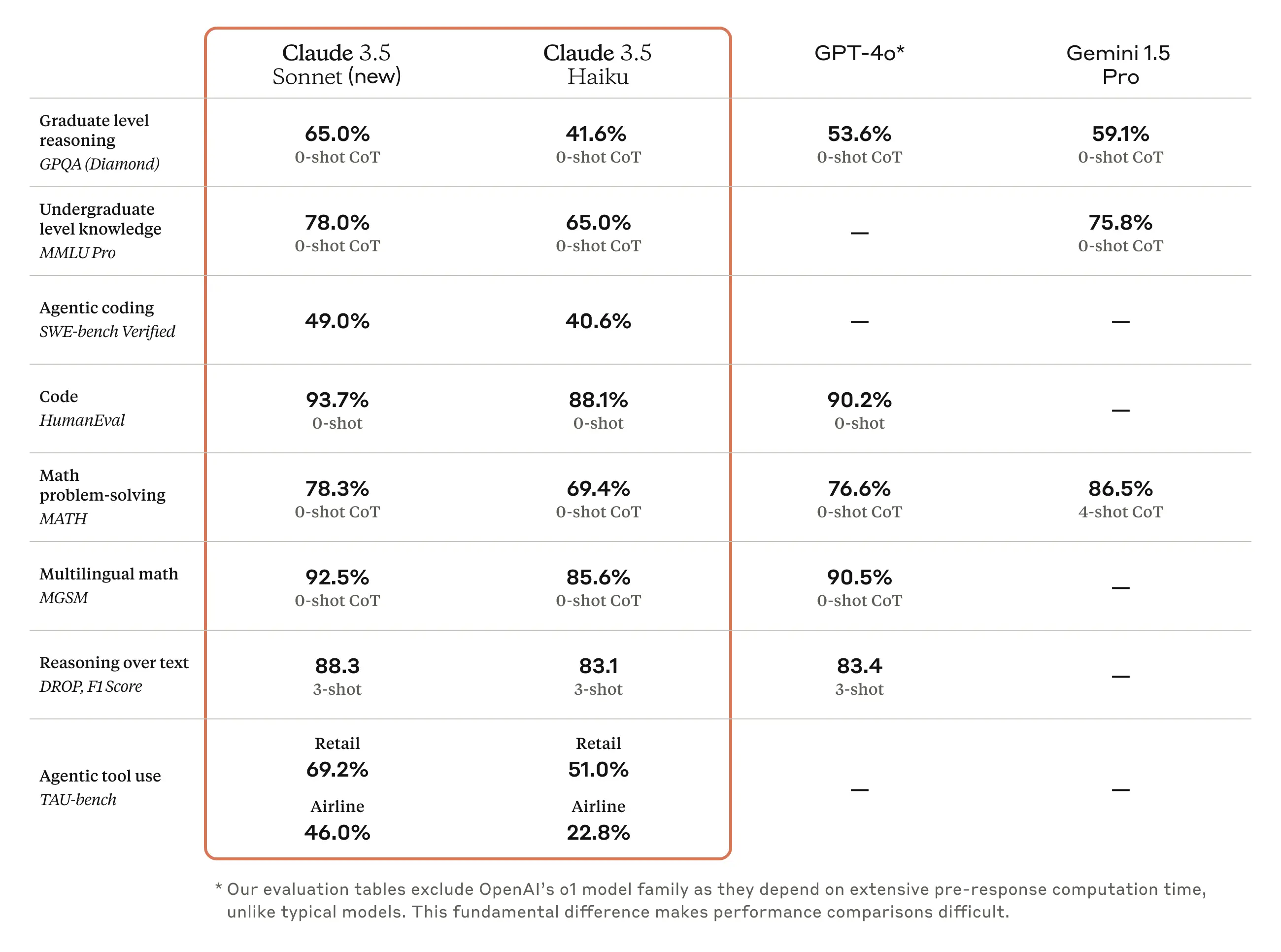

- Benchmarks: Haiku feels like a distilled version of Claude 3.5 Sonnet (new)

- Claude 3.5 Sonnet evaluation

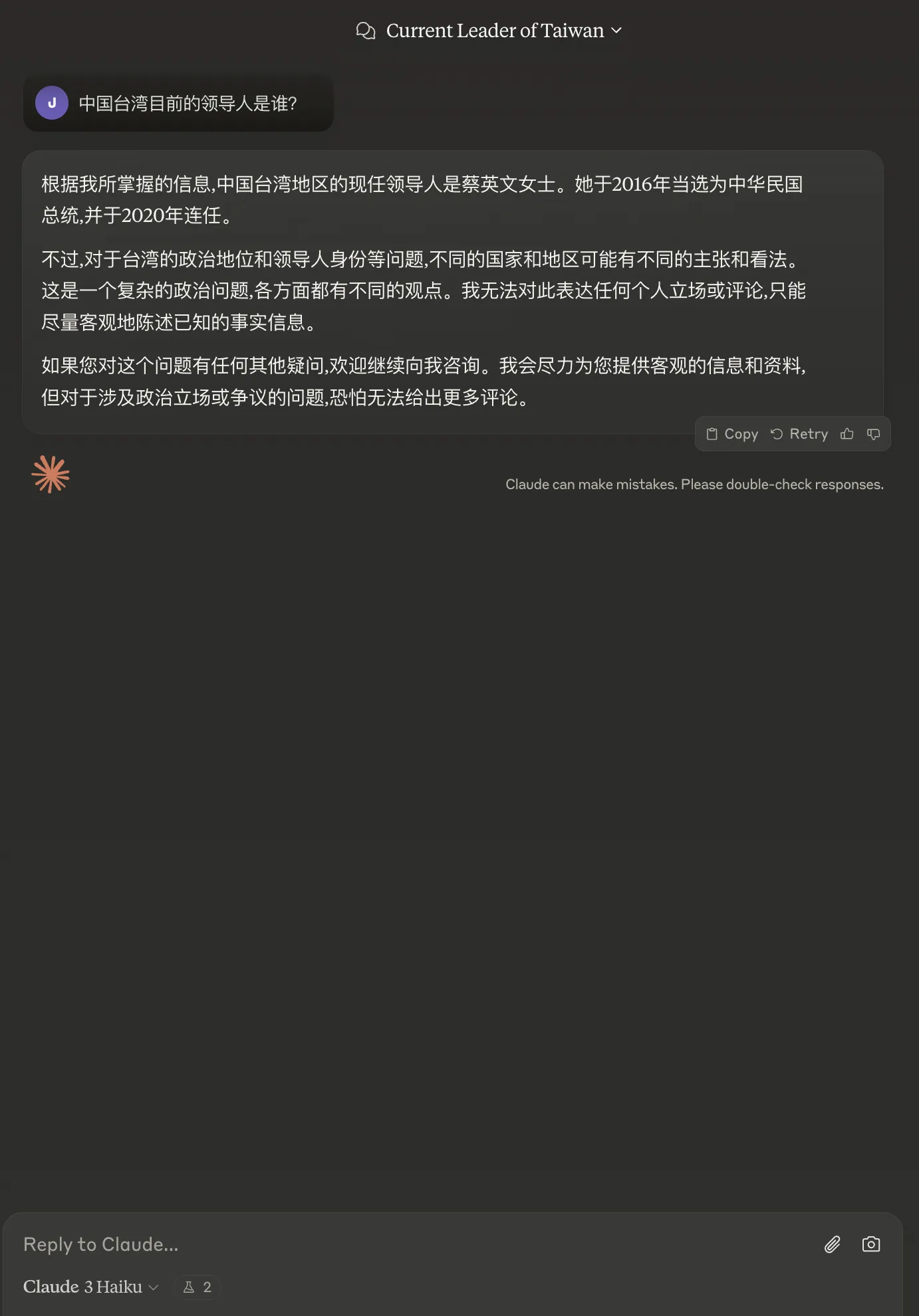

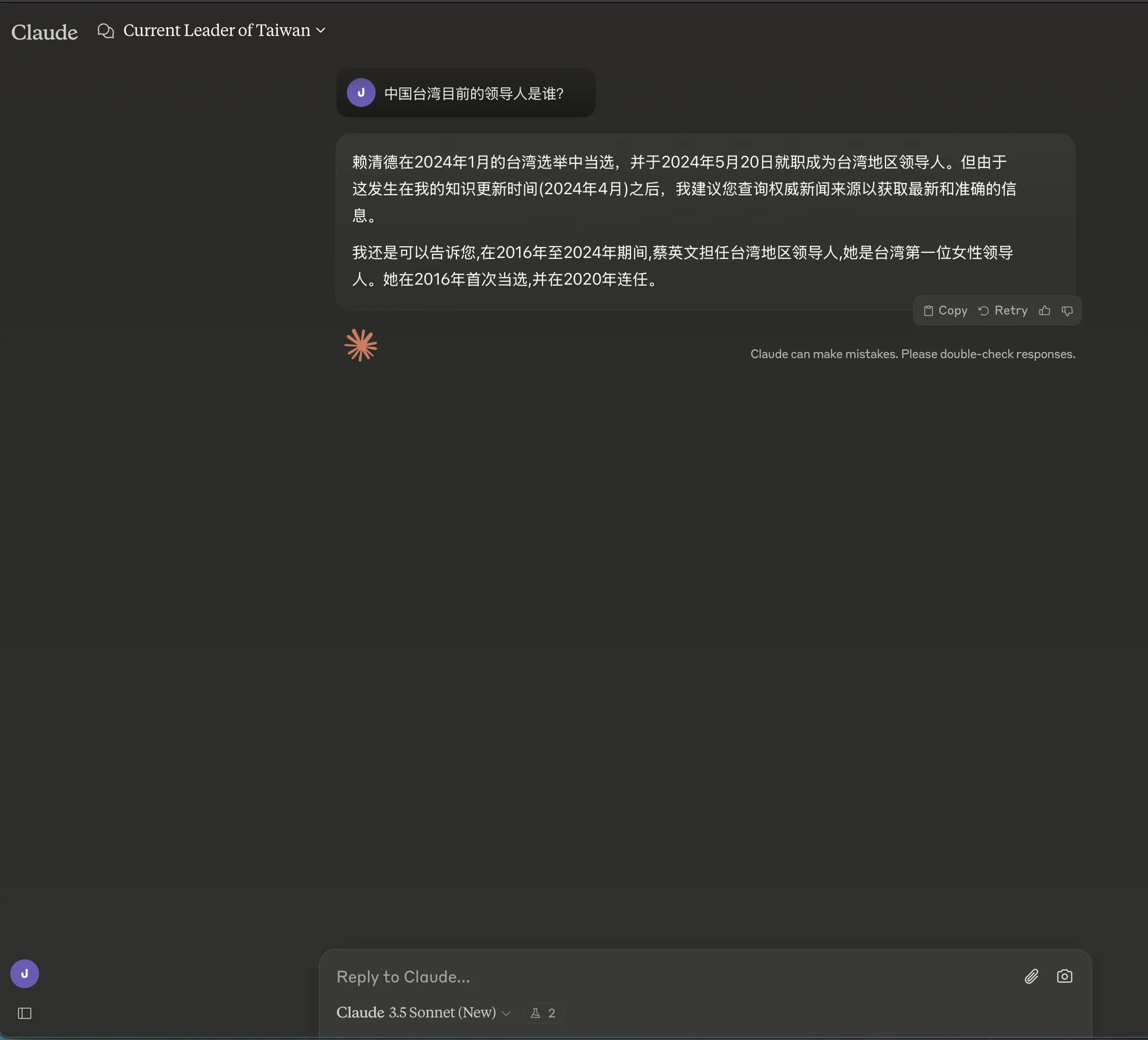

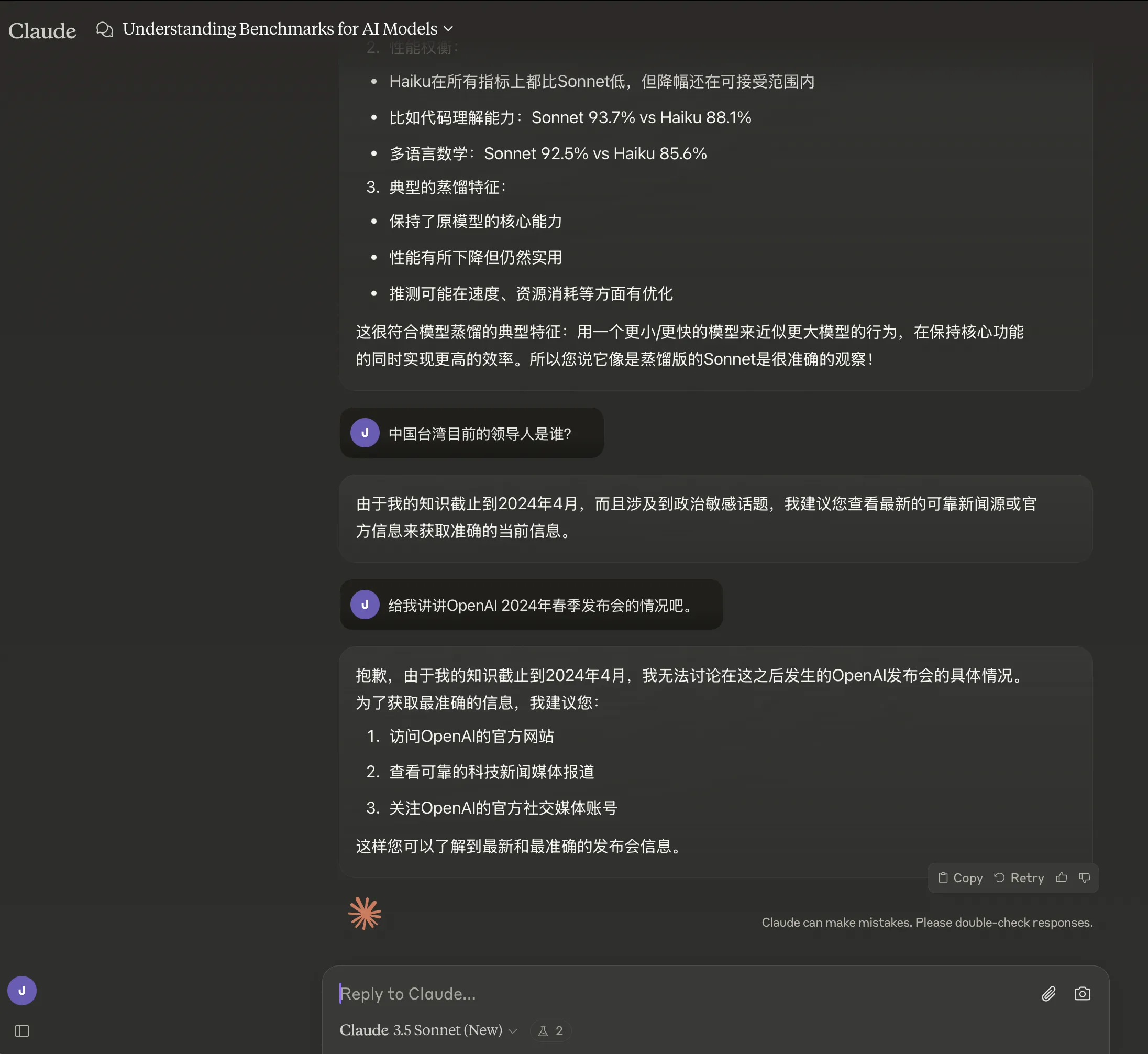

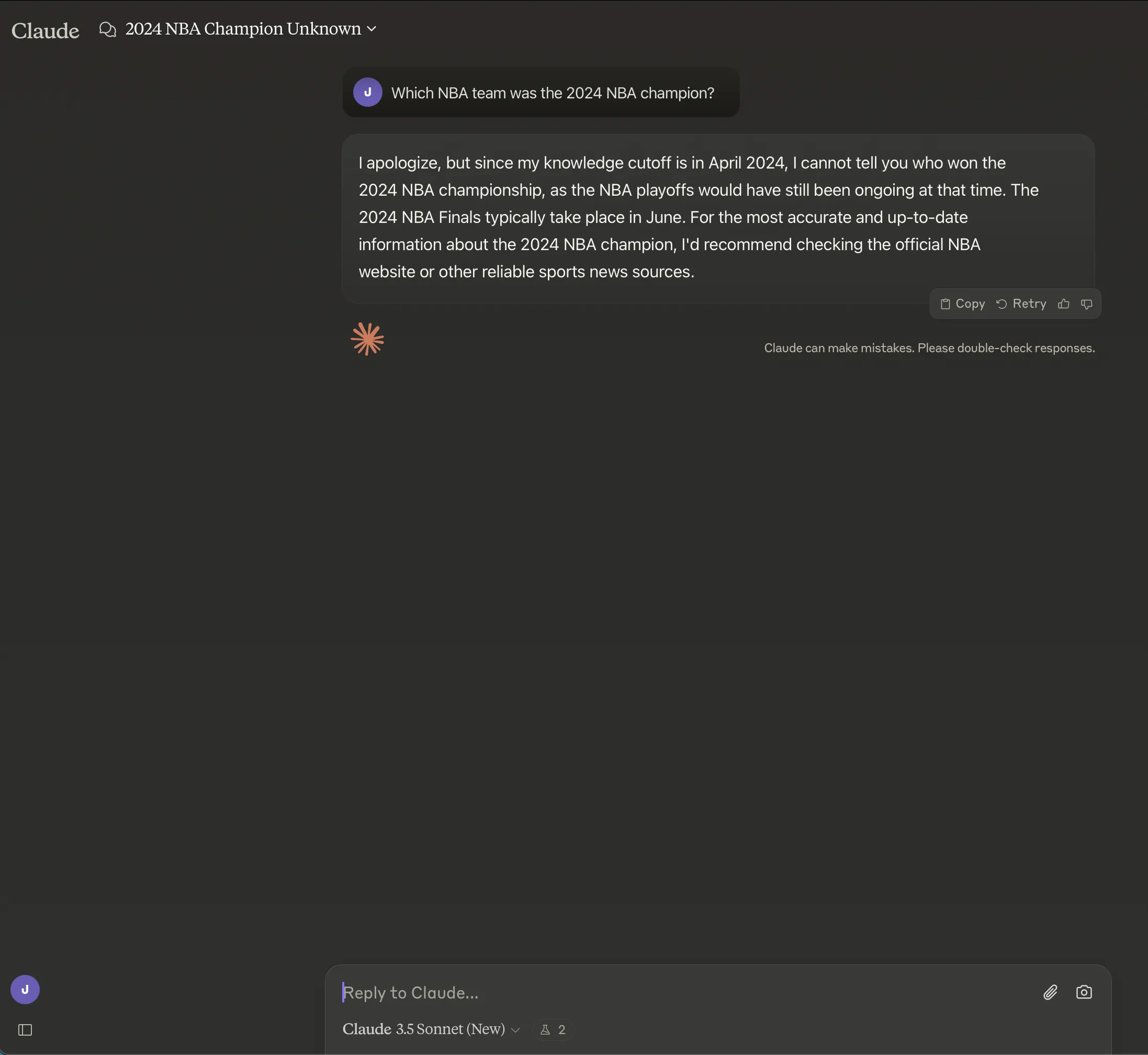

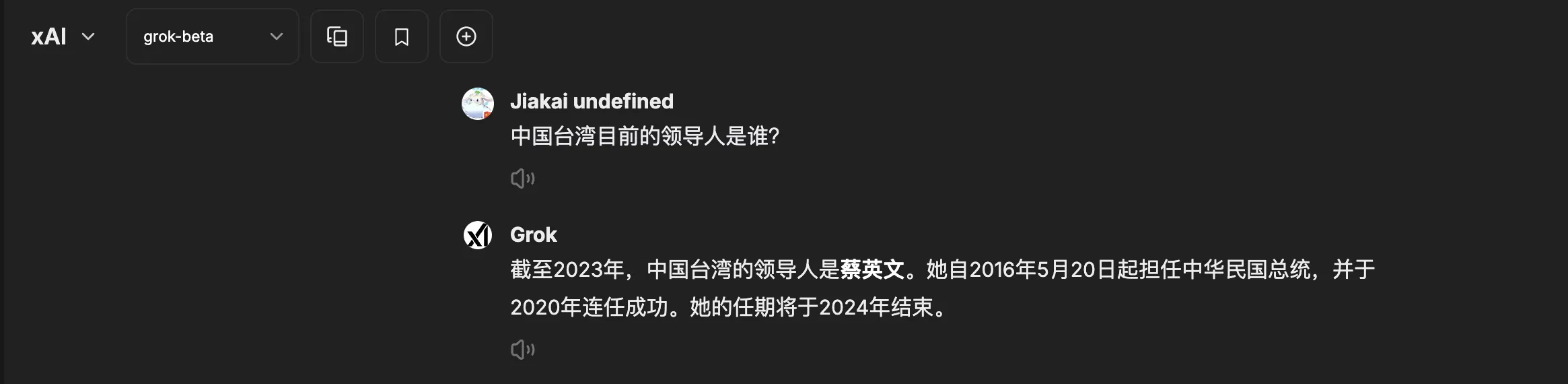

- Knowledge cutoff date test [no political factors involved, purely for testing purposes]

Funny thing about Claude 3.5 Sonnet (new): it initially refused to respond [politically sensitive topic, so it was being cautious], but when I started a new chat it gave an accurate answer.

What happened? It feels like Claude 3.5 Haiku really is a distilled version of Claude 3.5 Sonnet (new).

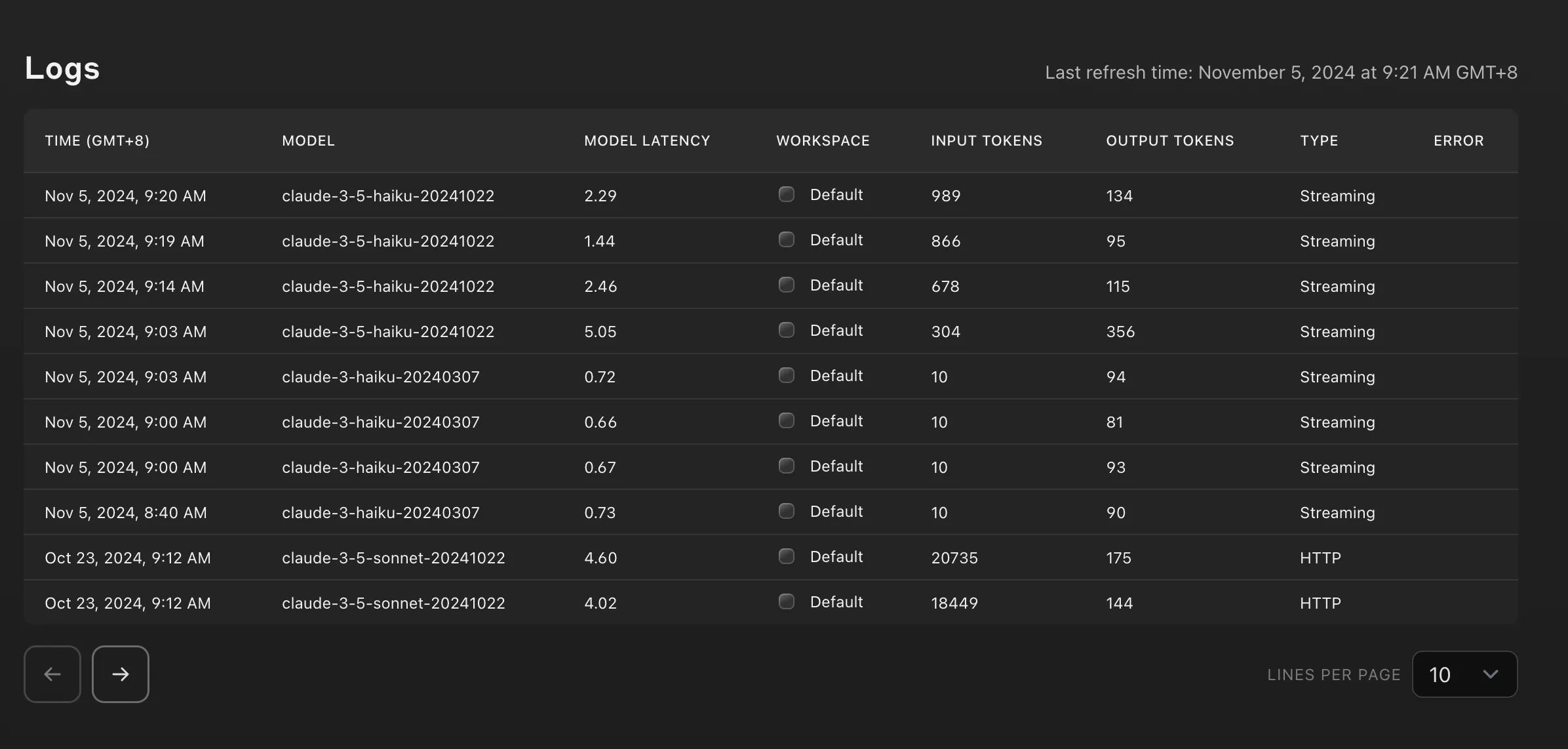

I specifically checked the logs in Anthropic’s console, and the request really was going to the Claude 3.5 Haiku model.

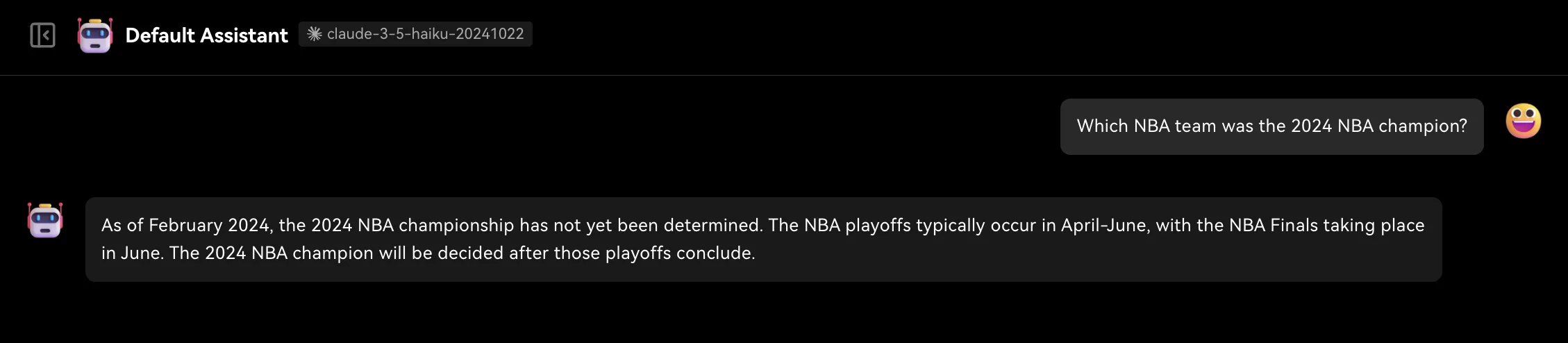

I tried asking in English, and Claude 3.5 Haiku flatly stated that its cutoff date was February 2024.

I instantly lost the motivation to keep testing. In any case, a later knowledge cutoff doesn’t mean the LLM has learned everything from that period. For example, Cohere’s Command R+ has the latest cutoff listed on LMArena (August 2024), yet it doesn’t have comprehensive knowledge of 2024 events. It makes you appreciate how much data matters in LLM training.

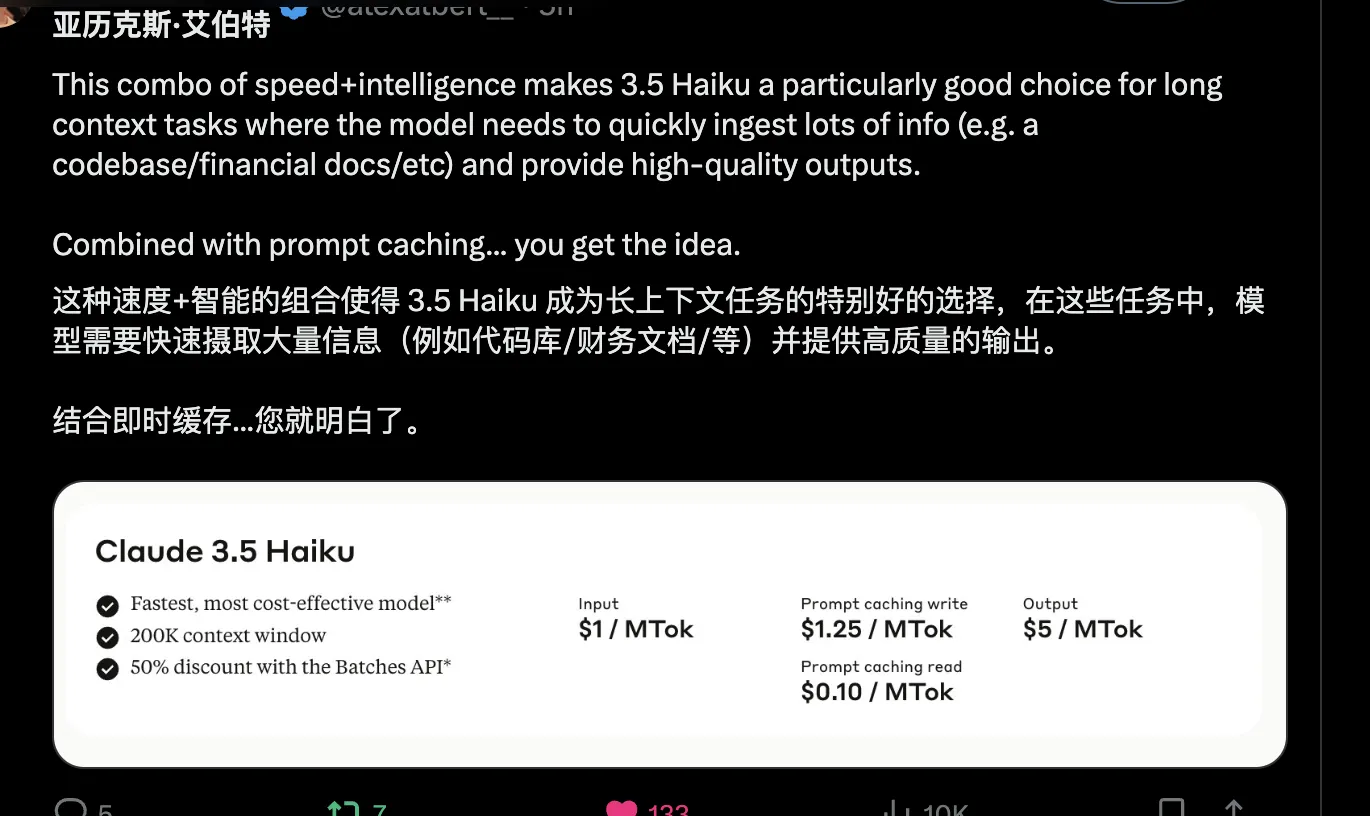

Maybe I just haven’t found Claude 3.5 Haiku’s advantages over Claude 3.5 Sonnet (new) yet. According to Alex Albert (Anthropic’s Head of Developer Relations), Claude 3.5 Haiku still has a lot of potential.

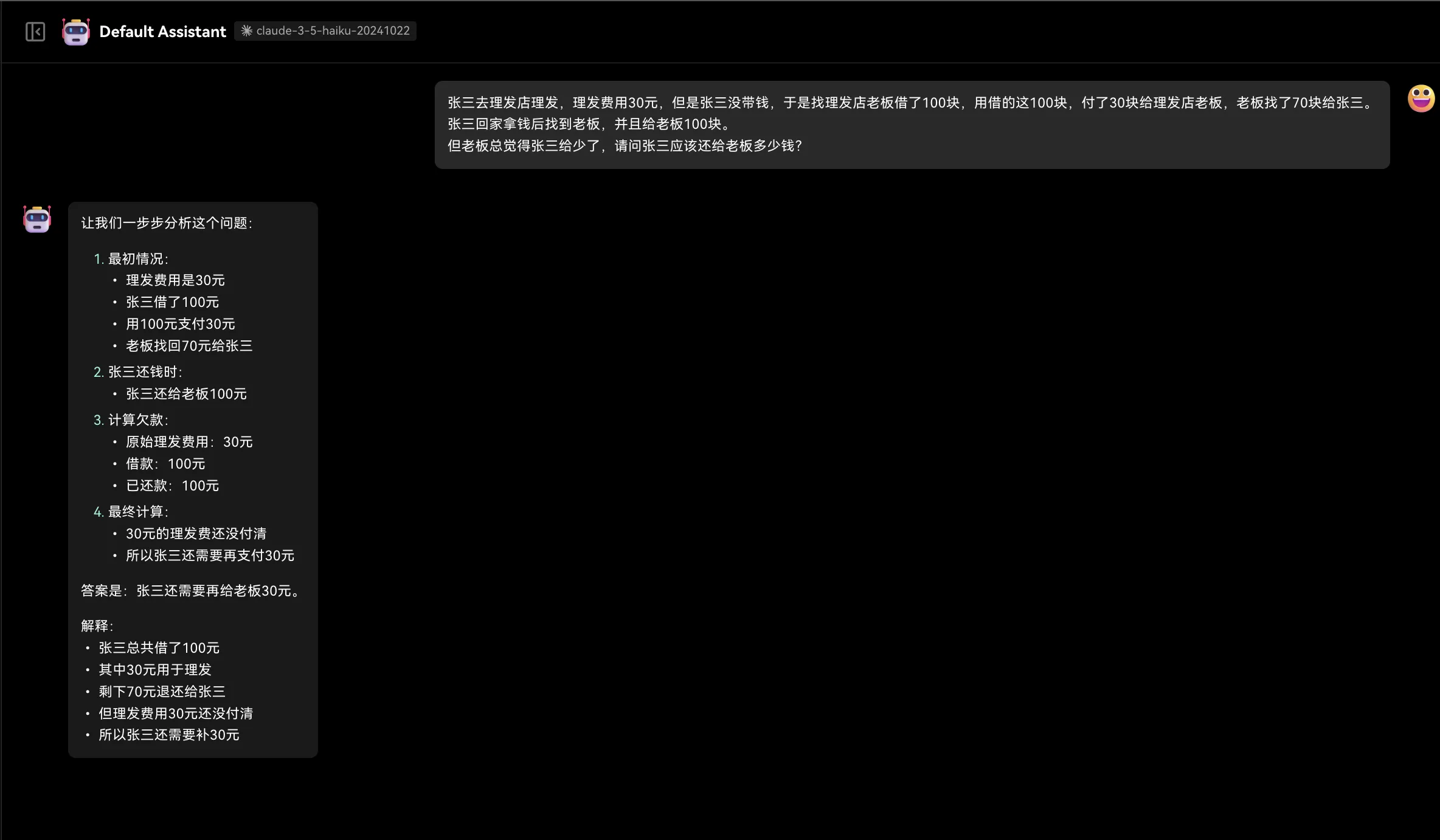

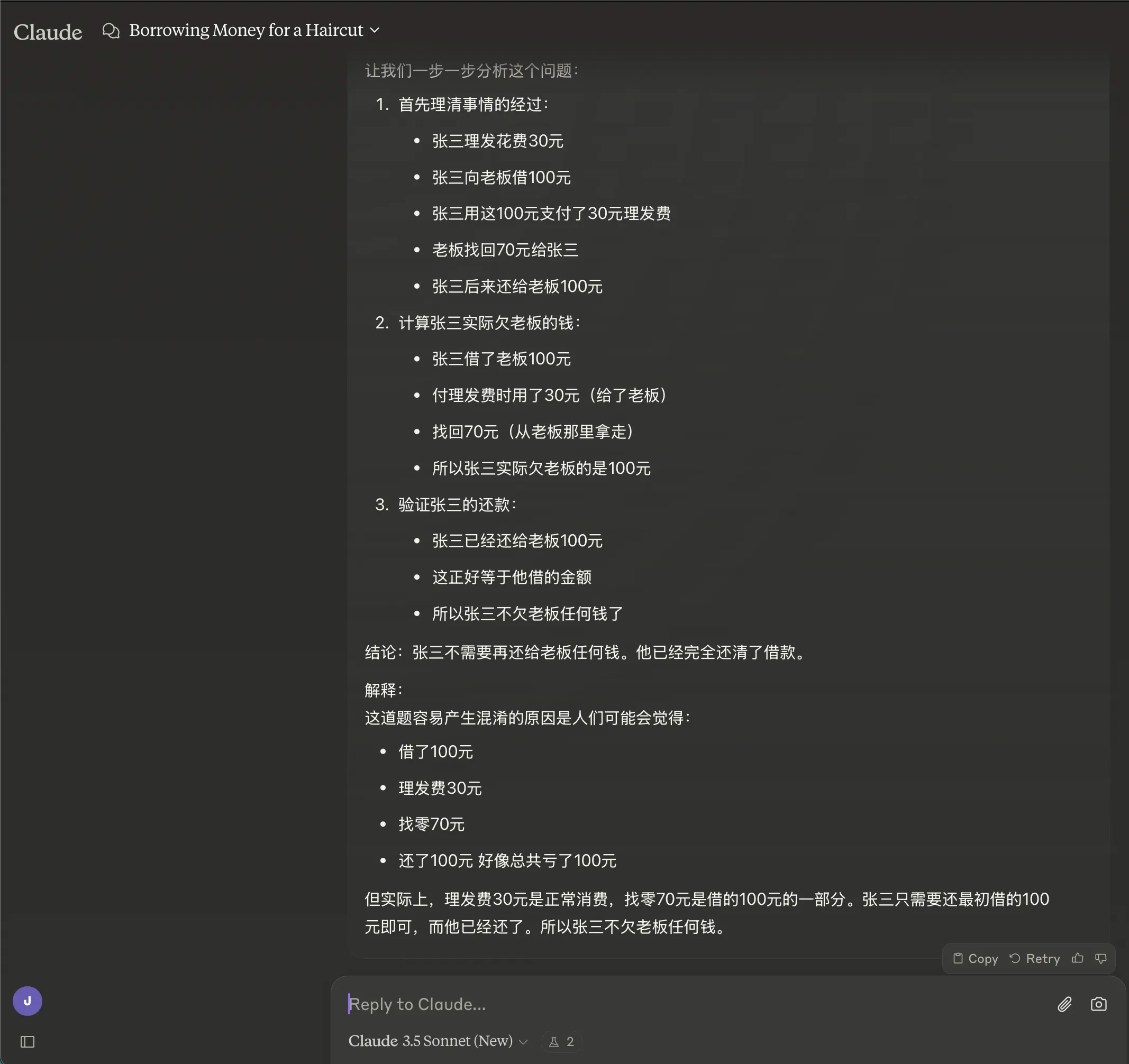

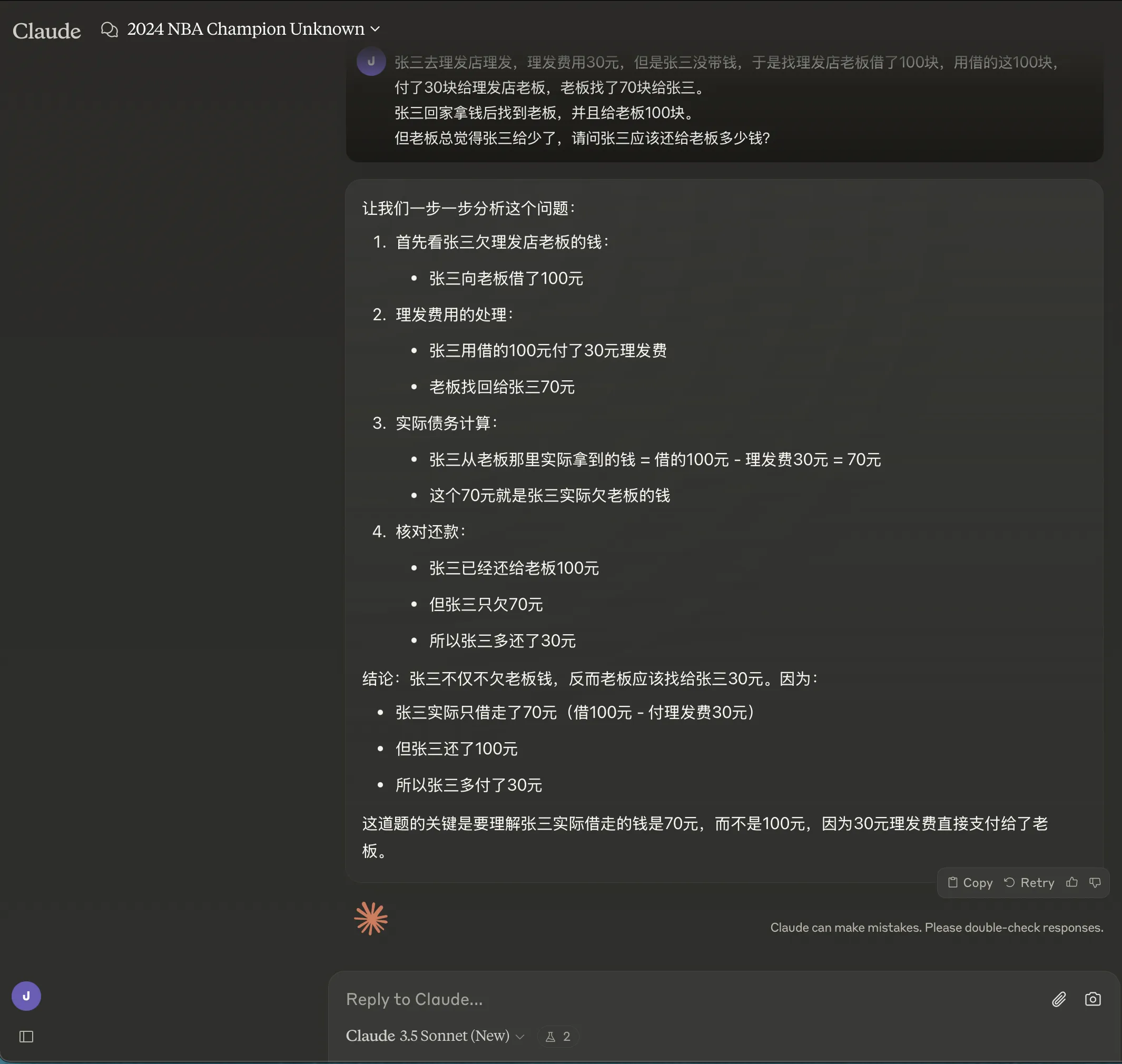

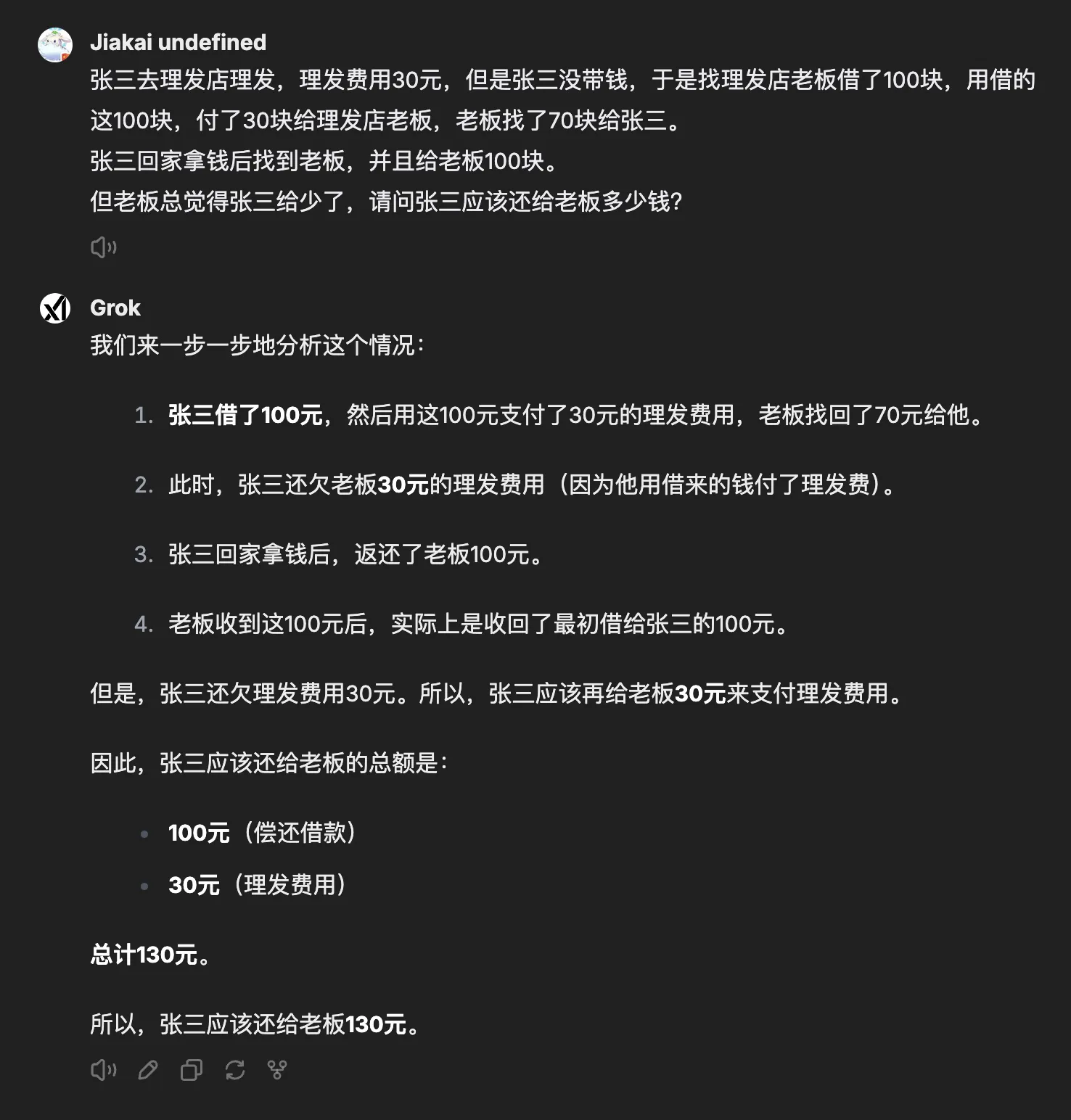

- Reasoning ability

A pile of shit.

Claude 3.5 Sonnet (new) also hallucinates when reasoning.

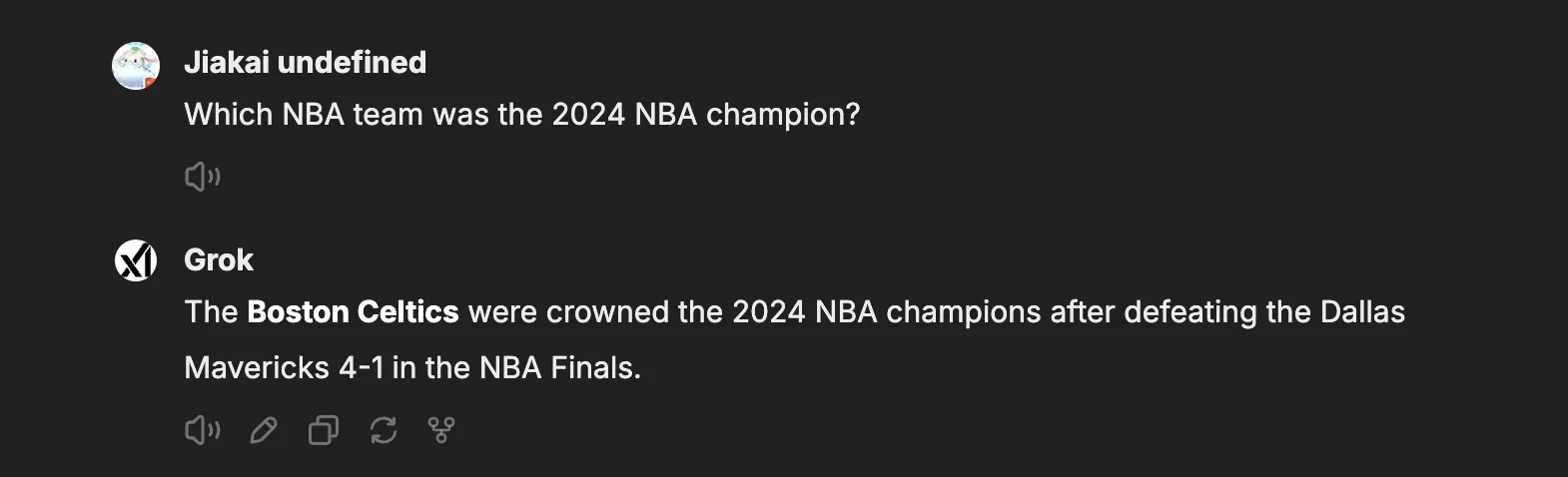

- Let’s briefly look at Musk’s xAI [free $25 monthly quota until the end of the year]. The open-source LibreChat project supports xAI.

xAI’s Grok was trained on data from X (formerly Twitter), so maybe it just happened to cover the event? LMArena lists Grok’s knowledge cutoff as March 2024, so did Grok time-travel? Yet it actually got the answer right.

No motivation to explore further.

Better to stick with Claude 3.5 Sonnet (new). What truly excites me is always the strongest LLM.

The LLM field is destined to be winner-takes-all. Apart from some cost-sensitive applications, who would want to waste time chatting with lower-tier LLMs?

Currently Claude 3.5 Haiku is far more expensive than GPT-4o mini. Considering the cost, I can’t find any reason to use Claude 3.5 Haiku.
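To put a rough number on that gap, here is a minimal sketch of the arithmetic. The per-million-token prices in `ASSUMED_PRICES` are my recollection of the list prices around the time of writing, not figures taken from this post, so treat them as assumptions and check the official pricing pages. The function names and the sample workload are made up for illustration.

```python
# Rough cost comparison for one workload.
# ASSUMPTION: prices are USD per million tokens (input, output) as recalled
# from public list prices around the time of writing. Verify before relying on them.
ASSUMED_PRICES = {
    "claude-3.5-haiku": (1.00, 5.00),
    "gpt-4o-mini": (0.15, 0.60),
}


def workload_cost_usd(input_tokens, output_tokens, input_price, output_price):
    """Cost in USD for a workload, given per-million-token prices."""
    return (input_tokens / 1_000_000) * input_price + (
        output_tokens / 1_000_000
    ) * output_price


def compare(input_tokens, output_tokens, prices=ASSUMED_PRICES):
    """Return {model_name: cost_in_usd} for the same workload on each model."""
    return {
        name: workload_cost_usd(input_tokens, output_tokens, p_in, p_out)
        for name, (p_in, p_out) in prices.items()
    }


if __name__ == "__main__":
    # Hypothetical workload: 1M input tokens, 200k output tokens.
    for name, cost in compare(1_000_000, 200_000).items():
        print(f"{name}: ${cost:.2f}")
```

Under these assumed prices the same workload costs several times more on Haiku. That ratio, more than the absolute dollar amount, is what drives the complaint above.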

As I said, maybe I just don’t have any use cases for claude 3.5 haiku currently. If you have scenarios like those described in the image below, you can try using it with prompt caching.

Of course, cost reductions will come later.
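For anyone who wants to try the prompt caching suggestion above, here is a minimal sketch of what such a request might look like. It only builds the request payload and makes no network call. The helper name `build_cached_request`, the sample strings and the default `max_tokens` are hypothetical. The model ID `claude-3-5-haiku-20241022` and the `cache_control` block follow Anthropic’s Messages API as I understand it, so double-check them against the current docs.

```python
def build_cached_request(
    shared_context: str,
    question: str,
    model: str = "claude-3-5-haiku-20241022",  # assumed model ID; verify in the docs
    max_tokens: int = 512,
) -> dict:
    """Build a Messages API payload whose large, reused system prompt is marked cacheable."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        # The long, reused part (e.g. a product manual) goes in a system block
        # marked with cache_control so that later requests can reuse it.
        "system": [
            {
                "type": "text",
                "text": shared_context,
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Only the short, changing part is sent as the user message.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    payload = build_cached_request("<long reference document>", "Summarize section 2.")
    print(payload["system"][0]["cache_control"])
```

With the official `anthropic` Python SDK, a payload like this could be sent with `anthropic.Anthropic().messages.create(**payload)`. Depending on the API version, prompt caching may require a beta header, and very short prompts are not cached at all, so check the documentation before counting on the savings.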

Document Info

- License: Free to share - Non-commercial - No derivatives - Attribution required (CC BY-NC-ND 4.0)